Cassandra Internals Architecture

Here, we'll look at how data is written, read, updated, and deleted in Cassandra, a horizontally scalable NoSQL database.

Before we talk about how Cassandra maintains data, we first need to understand some basic terminology:

- CommitLog: This is an append-only log of all changes local to a Cassandra node. Any data written to Cassandra is first written to the commit log and then to a memtable, so the data can be restored if the node fails. The commit log also makes upserts cheaper: because every change is already durable in the log, Cassandra does not need to flush each update to an SSTable to guard against data loss. You may be wondering: if Cassandra has to write updates to disk anyway, why not write to SSTables directly? The answer is that the commit log is optimized for writing. Unlike SSTables, which store rows in sorted order, the commit log stores updates in the order in which Cassandra processed them. It also stores changes for all column families in a single file, so the disk doesn't need to do a bunch of seeks when updates for multiple column families arrive at the same time.

- MemTable: A memtable is maintained per CQL table. It has a configured memory size; once it reaches that size, the memtable is flushed to disk as a new SSTable. A memtable is an in-memory write-back cache with content stored as key/column, sorted by key. It also serves Cassandra's read operations. There is only one active memtable per CQL table, although others may be present while they wait to be flushed.

- SSTable: A Sorted String Table (SSTable) is an immutable data file that Cassandra uses to store data permanently on disk. It is a file of key/value string pairs, sorted by key. A new SSTable is created each time a memtable is flushed, so a CQL table usually has several SSTables on disk. One partition's data can be spread across multiple SSTables, and one SSTable can hold data for multiple partitions. An SSTable can also be mapped into memory, which allows lookups and scans without touching the disk.

- Compaction: The data of a CQL table is the combination of its memtable and all of its SSTables on disk. To read from a CQL table efficiently, Cassandra periodically merges the table's SSTables into fewer, larger ones; this process is known as compaction. When compaction happens depends on the strategy. There are three compaction strategies: a) Size-tiered: the default strategy in Cassandra. It is best suited to insert-heavy, read-light workloads. b) Leveled: best suited to read-heavy workloads that have frequent updates to existing rows. c) Time-window: best suited to time-series data used along with TTL.

At time T5, compaction runs: it deletes SSTables 1, 2, and 3 and creates a new one (4). This also helps Cassandra's read path by reducing the number of SSTables that a lookup has to check.
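The merge step can be sketched in Python as follows. This is a toy model under stated assumptions, not Cassandra's implementation: each SSTable is represented by a hypothetical in-memory dict that maps a key to a `(timestamp, value)` pair, and `compact` is a name made up for this illustration. Real SSTables are files ordered by token, and real compaction strategies also decide which SSTables to merge and when.

```python
from typing import Dict, List, Tuple

# Hypothetical stand-in for an SSTable: key -> (write timestamp, value).
SSTable = Dict[str, Tuple[int, str]]


def compact(sstables: List[SSTable]) -> SSTable:
    """Merge several SSTables into one, keeping the newest version of each key."""
    merged: SSTable = {}
    for table in sstables:
        for key, (timestamp, value) in table.items():
            # Keep this version only if it is newer than the one seen so far.
            if key not in merged or timestamp > merged[key][0]:
                merged[key] = (timestamp, value)
    # SSTables are sorted, so emit the merged result in key order.
    return dict(sorted(merged.items()))


sstable_1 = {"user:1": (1, "alice"), "user:2": (1, "bob")}
sstable_2 = {"user:1": (2, "alice@new"), "user:3": (2, "carol")}
sstable_3 = {"user:2": (3, "bob@new")}

# SSTables 1, 2, and 3 are replaced by a single SSTable 4.
sstable_4 = compact([sstable_1, sstable_2, sstable_3])
print(sstable_4)
# {'user:1': (2, 'alice@new'), 'user:2': (3, 'bob@new'), 'user:3': (2, 'carol')}
```

After the merge, a read for `user:1` has one place to look instead of two.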

- NTP: It is important to mention NTP here. It is a networking protocol for clock synchronization between machines. Cassandra relies on write timestamps to decide which version of a row is the latest, so if NTP is not enabled and clocks drift apart, Cassandra may not identify the latest data for a row correctly. Compaction, which uses the same timestamps, will not function properly either.

SSTable in detail

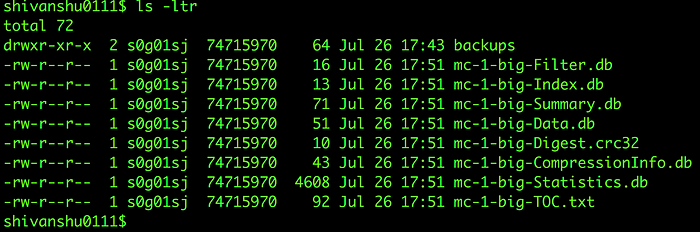

Below is the snapshot for SSTable:

It's a snapshot of the directory of a CQL table, located at LOC_TO_CASS_DATA/data/keyspace_name/table_name/. None of these files are human-readable except TOC.txt, which contains the list of components for the SSTable.

a) Filter.db: The filter file stores the bloom filter for the row keys. It is stored on disk so that the bloom filter is available again after the node restarts.

b) Index.db: The index file contains the SSTable Index which maps row keys to their respective offsets in the Data file. Row keys are stored in sorted order based on their tokens. Each row key is associated with an index entry which includes the position in the Data file where its data is stored.

c) Summary.db: The summary file contains the index summary and the index boundaries of the SSTable index. The index summary is calculated from the SSTable index: it samples the index entries that are index_interval apart (the default index_interval is 128), along with their positions in the index file. The index boundaries are the first and last row keys in the SSTable index.

d) Data.db: This file contains the actual data; the contents of all rows are persisted here.

e) CompressionInfo.db: This file contains the compression metadata information that includes chunk size, compression data lengths, etc.

f) Statistics.db: This file contains information about the partitioner used to distribute the partition keys, histograms of estimated row sizes and estimated column counts, the ratio of compressed to uncompressed data, the list of SSTable generation numbers from which this SSTable was created, etc.
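To make the relationship between Index.db and Summary.db more concrete, here is a minimal sketch of a sampled index lookup. It is only an illustration: the names `build_summary` and `lookup` are hypothetical, the index is a plain Python list of `(row key, data offset)` pairs sorted by key rather than by token, and the on-disk formats are ignored entirely.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

INDEX_INTERVAL = 128  # sampling interval mentioned in the text

IndexEntry = Tuple[str, int]  # (row key, offset in the Data file)


def build_summary(index: List[IndexEntry], interval: int = INDEX_INTERVAL) -> List[Tuple[str, int]]:
    """Sample every `interval`-th index entry as (row key, position in the index)."""
    return [(index[i][0], i) for i in range(0, len(index), interval)]


def lookup(key: str, index: List[IndexEntry], summary: List[Tuple[str, int]],
           interval: int = INDEX_INTERVAL) -> Optional[int]:
    """Return the Data-file offset for `key`, or None if the key is absent."""
    sampled_keys = [sampled_key for sampled_key, _ in summary]
    # Binary-search the small summary for the last sample that is <= key.
    slot = bisect_right(sampled_keys, key) - 1
    if slot < 0:
        return None  # key sorts before the first key in this SSTable
    start = summary[slot][1]
    # Scan at most `interval` entries of the full index, starting at the sample.
    for row_key, data_offset in index[start:start + interval]:
        if row_key == key:
            return data_offset
    return None


# 1000 index entries, each pointing at a made-up offset in the Data file.
index = [(f"key{i:05d}", i * 100) for i in range(1000)]
summary = build_summary(index)

print(len(summary))                         # 8
print(lookup("key00300", index, summary))   # 30000
print(lookup("nope", index, summary))       # None
```

The summary holds 8 entries instead of 1000, which is why it can stay in memory while the full index stays on disk.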

Cassandra write path

Cassandra writes are much faster than those of a traditional RDBMS, and the reason is its storage engine. Cassandra uses log-structured merge (LSM) trees, whereas relational databases typically use B+ tree data structures to store data. Cassandra doesn't care about the existing value of the column being modified: it skips the read entirely and creates a record in memory containing the column and its updated value. It then groups inserts and updates in memory and, at intervals, writes the data to disk sequentially in append mode. With each flush, data is written to immutable files (SSTables) and is never overwritten. Because Cassandra skips scanning long data files and writing back into them, writes take significantly less time.

The client connects to a node in the Cassandra cluster, which becomes the coordinator node for the request. The coordinator identifies the replica nodes that own the data and, depending on the consistency level (CL), how many of them must acknowledge the write. On each target node, the data is written to the commit log (on disk) and then added to the memtable (in memory), sorted by partition key. At some point (for instance, when the memtable is full), Cassandra flushes the data to a new SSTable on disk, and the memtable and the corresponding commit log space are purged so that the space can be reused.

Cassandra write-path within a node. Memtables and SSTables belong to the target CQL table only.
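The per-node write path can be sketched as a small Python class. This is a toy model under loud assumptions: the `Node` class, its `memtable_limit` parameter, and the `commitlog.jsonl` file name are all invented for this example, the limit counts rows rather than bytes, and the "SSTables" are kept as sorted dicts in memory instead of files on disk.

```python
import json
import os
import tempfile
from typing import Dict, List


class Node:
    """Toy single-table node: commit log -> memtable -> SSTable."""

    def __init__(self, data_dir: str, memtable_limit: int = 3) -> None:
        self.commitlog_path = os.path.join(data_dir, "commitlog.jsonl")
        self.memtable_limit = memtable_limit  # hypothetical limit, in rows
        self.memtable: Dict[str, str] = {}
        self.sstables: List[Dict[str, str]] = []

    def write(self, key: str, value: str) -> None:
        # 1. Append the change to the commit log on disk, in arrival order.
        with open(self.commitlog_path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
        # 2. Apply the change to the in-memory memtable (an upsert).
        self.memtable[key] = value
        # 3. Flush once the memtable is full.
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self) -> None:
        # Write the memtable out as a new immutable SSTable, sorted by key.
        self.sstables.append(dict(sorted(self.memtable.items())))
        # The flushed data no longer needs the memtable or the commit log.
        self.memtable = {}
        open(self.commitlog_path, "w", encoding="utf-8").close()

    def replay(self) -> None:
        """Rebuild the memtable from the commit log after a restart."""
        if not os.path.exists(self.commitlog_path):
            return
        with open(self.commitlog_path, encoding="utf-8") as log:
            for line in log:
                record = json.loads(line)
                self.memtable[record["key"]] = record["value"]


with tempfile.TemporaryDirectory() as data_dir:
    node = Node(data_dir)
    for i in range(4):
        node.write(f"k{i}", f"v{i}")
    flushed = node.sstables          # first three writes, flushed together
    pending = dict(node.memtable)    # fourth write, still only in memory

    # Simulate a crash: a fresh node on the same directory replays the log.
    recovered = Node(data_dir)
    recovered.replay()
    recovered_memtable = dict(recovered.memtable)

print(flushed)             # [{'k0': 'v0', 'k1': 'v1', 'k2': 'v2'}]
print(recovered_memtable)  # {'k3': 'v3'}
```

The fourth write was never flushed, yet it survives the simulated crash because it was appended to the commit log before it reached the memtable.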

How does a row update work in Cassandra?

Cassandra treats each new row as an upsert: if the new row has the same primary key as that of an existing row, Cassandra processes it as an update to the existing row. It is possible that many versions of the same row may exist in the database. This is why Cassandra performs another round of comparisons during a read process. When a client requests data with a particular primary key, Cassandra retrieves many versions of the row from one or more replicas. The version with the most recent timestamp is the only one that is returned to the client (“last-write-wins”).
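A minimal sketch of last-write-wins reconciliation follows. The `Version` type and the `reconcile` function are hypothetical names for this example, and a whole row is treated as one value to keep it short. The second half shows why unsynchronized clocks are a problem: a write that happened later but was stamped by a lagging clock loses.

```python
from typing import List, NamedTuple


class Version(NamedTuple):
    value: str
    timestamp: int  # write timestamp, e.g. microseconds since the epoch


def reconcile(versions: List[Version]) -> Version:
    """Return the version with the most recent timestamp (last-write-wins)."""
    return max(versions, key=lambda version: version.timestamp)


# Versions of the same row, as they might come back from different replicas.
versions = [
    Version("email=old@example.com", timestamp=1000),
    Version("email=new@example.com", timestamp=2000),
]
winner = reconcile(versions)
print(winner.value)  # email=new@example.com

# A later write stamped by a clock that runs behind gets a smaller timestamp,
# so the earlier write still wins.
skewed = versions + [Version("email=newest@example.com", timestamp=1500)]
skewed_winner = reconcile(skewed)
print(skewed_winner.value)  # email=new@example.com
```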

How does a row delete work in Cassandra?

Cassandra treats a delete as an upsert as well. Instead of removing the data immediately, it marks the deleted row with a tombstone. A tombstone is a marker in a row that indicates a column is deleted; when compaction occurs, it removes the column from the row, as shown in the second figure.
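The tombstone mechanism can be illustrated with a short sketch. Everything here is a simplification with made-up names: a tombstone is modeled as a `None` value, SSTables are dicts, and the `gc_grace` argument is a toy stand-in for the grace period that Cassandra waits before it purges tombstones (the `gc_grace_seconds` table option).

```python
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, Optional[str]]  # (write timestamp, value); None marks a tombstone
SSTable = Dict[str, Cell]


def newest(sstables: List[SSTable], key: str) -> Optional[Cell]:
    """Return the most recent cell for `key` across all SSTables, if any."""
    candidates = [table[key] for table in sstables if key in table]
    return max(candidates, key=lambda cell: cell[0]) if candidates else None


def read(sstables: List[SSTable], key: str) -> Optional[str]:
    """A read returns nothing when the newest version is a tombstone."""
    cell = newest(sstables, key)
    return None if cell is None else cell[1]


def compact(sstables: List[SSTable], now: int, gc_grace: int) -> SSTable:
    """Merge SSTables and drop tombstones that are older than the grace period."""
    merged: SSTable = {}
    for key in sorted({key for table in sstables for key in table}):
        timestamp, value = newest(sstables, key)
        if value is None and now - timestamp >= gc_grace:
            continue  # tombstone is old enough: the data is physically removed
        merged[key] = (timestamp, value)
    return merged


older = {"a": (1, "x"), "b": (1, "y")}
newer = {"a": (5, None)}  # the delete of "a" is written as a tombstone

print(read([older, newer], "a"))                      # None
print(read([older, newer], "b"))                      # y
print(compact([older, newer], now=6, gc_grace=10))    # {'a': (5, None), 'b': (1, 'y')}
print(compact([older, newer], now=20, gc_grace=10))   # {'b': (1, 'y')}
```

Note that the old value `"x"` still sits in the older SSTable until compaction runs; the tombstone only hides it from reads.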

Cassandra read path

The Cassandra read path is quite complex because it involves more components than the write path. A read combines the active memtable with all of the table's SSTables on disk. Before we walk through the read path, we need to understand its components.

- Memtable: It is already described above.

- Row Cache: It stores frequently accessed rows in a cache to improve read performance. The row cache size is configurable, as is the number of rows to store. It uses an LRU eviction policy to evict rows when the cache is full. The row cache is not write-through: if a write comes in for a row, the cache entry for that row is invalidated, and the row is not cached again until it is read.

- Bloom Filter: It is used to identify whether a row is present in an SSTable or not. It is stored entirely in off-heap memory, which relieves garbage collection pressure in the JVM. It can say for certain that a row does not exist in an SSTable, but it can also give false positives.

- Partition key Cache: It caches partition keys together with their positions from the partition index. If the partition key is found in the cache, Cassandra can go directly to the compression offset map to find the compressed block on disk that has the data. The partition key cache size is configurable, as is the number of partition keys to store in the key cache.

- Partition Summary: It stores a sampled index of the partition index file (an index for the index of the actual data). It resides in memory and identifies the block of the partition index to read, which makes the lookup efficient.

- Partition index: It stores the index for all the partition keys and resides on disk. Once Cassandra gets the block from the partition summary, it seeks within that block of the partition index to find the index entry for the partition key.

- Compression Offset Map: It stores the exact location of the data on disk. It is consulted after a hit in either the partition key cache or the partition index.

Note: Caches are also written to disk so that they are available again after a restart.
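The "definitely absent, maybe present" behavior of a bloom filter is easy to see in a small sketch. The class below is a generic textbook bloom filter, not Cassandra's implementation: the `BloomFilter` name, the sizes, and the use of SHA-256 as the hash function are assumptions made for this example, and one byte is used per bit for readability.

```python
import hashlib
from typing import Iterator


class BloomFilter:
    """Textbook bloom filter: no false negatives, occasional false positives."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for clarity

    def _positions(self, key: str) -> Iterator[int]:
        # Derive `num_hashes` bit positions by salting the key with a seed.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key: str) -> None:
        for position in self._positions(key):
            self.bits[position] = 1

    def might_contain(self, key: str) -> bool:
        # False means "definitely not present"; True means "possibly present".
        return all(self.bits[position] for position in self._positions(key))


bloom = BloomFilter()
for partition_key in ["user:1", "user:2", "user:3"]:
    bloom.add(partition_key)

print(bloom.might_contain("user:2"))   # True: added keys are never reported absent
print(bloom.might_contain("user:99"))  # most likely False; True would be a false positive
```

A negative answer lets a read skip an SSTable without any disk access, which is why the filter sits early in the read path.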

Now, let's see the read path:

- Read the memtable. If the data is there, read it and merge it with the data from the SSTables.

- Read the row cache (if enabled). If the data is there, return it; otherwise go to the next step.

- Check the bloom filter. If it says the data does not exist, return the memtable data only (if found). If it says the data may be present in the SSTable, go to the next step.

- Read the partition key cache (if enabled). If the partition key is found in the cache, read the compression offset map for the cached value to get the exact location of the data on disk. If the partition key is not found in the cache, go to the next step.

- Read the partition summary. It gives the position of the relevant block in the partition index.

- Read the offset from the partition index to find the compressed block on disk that has the data.

- Locate the data on disk using the compression offset map.

- Fetch the data from the SSTable on disk.

Cassandra read-path in a single Cassandra node
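The steps above can be sketched as a toy read function. This is a heavily simplified model with invented names (`SSTable`, `Table`, `read`): the bloom filter is replaced by an exact Python set, the partition summary and compression offset map are left out, and the partition index is a dict held in memory. It only aims to show the order of the checks and the final merge by timestamp.

```python
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, str]  # (write timestamp, value)


class SSTable:
    def __init__(self, rows: Dict[str, Cell]) -> None:
        # Stand-in for Data.db: rows sorted by key.
        self.data: List[Tuple[str, Cell]] = sorted(rows.items())
        # Stand-in for the partition index: key -> position in `data`.
        self.index: Dict[str, int] = {key: pos for pos, (key, _) in enumerate(self.data)}
        # Stand-in for the bloom filter (exact here, so no false positives).
        self.bloom = set(self.index)


class Table:
    def __init__(self) -> None:
        self.memtable: Dict[str, Cell] = {}
        self.sstables: List[SSTable] = []
        self.row_cache: Dict[str, Cell] = {}
        self.key_cache: Dict[Tuple[int, str], int] = {}  # (sstable number, key) -> position

    def write(self, key: str, timestamp: int, value: str) -> None:
        self.memtable[key] = (timestamp, value)
        self.row_cache.pop(key, None)  # the row cache is invalidated on write

    def read(self, key: str) -> Optional[str]:
        # Step 1: memtable.
        memtable_cell = self.memtable.get(key)
        # Step 2: row cache. A cached row is already merged, and writes
        # invalidate it, so it can be returned directly.
        cached = self.row_cache.get(key)
        if cached is not None:
            return cached[1]
        candidates: List[Cell] = [] if memtable_cell is None else [memtable_cell]
        for number, sstable in enumerate(self.sstables):
            # Step 3: bloom filter. Skip SSTables that cannot contain the key.
            if key not in sstable.bloom:
                continue
            # Step 4: partition key cache, falling back to the partition index.
            position = self.key_cache.get((number, key))
            if position is None:
                position = sstable.index.get(key)
                if position is None:
                    continue  # would be a bloom filter false positive
                self.key_cache[(number, key)] = position
            # Final step: fetch the row from the SSTable data.
            candidates.append(sstable.data[position][1])
        if not candidates:
            return None
        winner = max(candidates, key=lambda cell: cell[0])  # newest timestamp wins
        self.row_cache[key] = winner
        return winner[1]


table = Table()
table.sstables.append(SSTable({"a": (1, "old"), "b": (1, "bee")}))
table.sstables.append(SSTable({"a": (2, "newer")}))
table.write("a", 3, "newest")

print(table.read("a"))        # newest
print(table.read("b"))        # bee
print(table.read("missing"))  # None
```

A second `table.read("b")` would be served from the row cache without touching any SSTable.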

Some important points to remember while working with Cassandra:

- The replication factor must be greater than one. 3 is the recommended RF for a production cluster.

- Check the compression, compaction, bloom filters, and cache settings and configure as per your requirements.

- Set the consistency level for your cluster. The consistency level can be set for each write and read at the client, as the use case requires.

- Keep disk utilization at or below half of the total available capacity, because compaction needs free space to write the merged SSTables before it deletes the old ones.
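When choosing a replication factor and consistency levels, a little arithmetic helps. The sketch below uses the commonly cited rules that a quorum is a majority of the replicas and that a read is guaranteed to overlap the latest acknowledged write when the number of replicas read plus the number written exceeds the replication factor. The function names are hypothetical, and the model ignores multiple datacenters and node failures.

```python
def quorum(replication_factor: int) -> int:
    """Number of replicas that form a majority."""
    return replication_factor // 2 + 1


def reads_see_latest_write(replication_factor: int, write_replicas: int, read_replicas: int) -> bool:
    """True when every read set must overlap every write set."""
    return write_replicas + read_replicas > replication_factor


rf = 3
q = quorum(rf)
print(q)                                      # 2
print(reads_see_latest_write(rf, q, q))       # True  (QUORUM writes, QUORUM reads)
print(reads_see_latest_write(rf, 1, 1))       # False (ONE writes, ONE reads)
print(reads_see_latest_write(rf, rf, 1))      # True  (ALL writes, ONE reads)
```

With RF = 3, QUORUM reads and writes tolerate one replica being down while still overlapping, which is one reason 3 is a popular choice.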